Enable your agent to use general LLM knowledge

This step-by-step guide explains how to enable general Large Language Model (LLM) knowledge for your agent.

Use general LLM knowledge

This feature gives your agent access to broader, pre-trained knowledge from the underlying Large Language Model (LLM), allowing it to answer questions that may not be covered in your uploaded content.

What this feature does

When enabled, your agent can:

- Answer general questions, or questions unrelated to your content, using the LLM’s base knowledge

- Fill in gaps where your provided data is missing content

- Respond more flexibly across a wider range of topics

This is useful if you expect questions beyond the scope of your uploaded documents, or if you want the agent to behave more like a general-purpose AI assistant.

Risks and limitations of enabling this setting

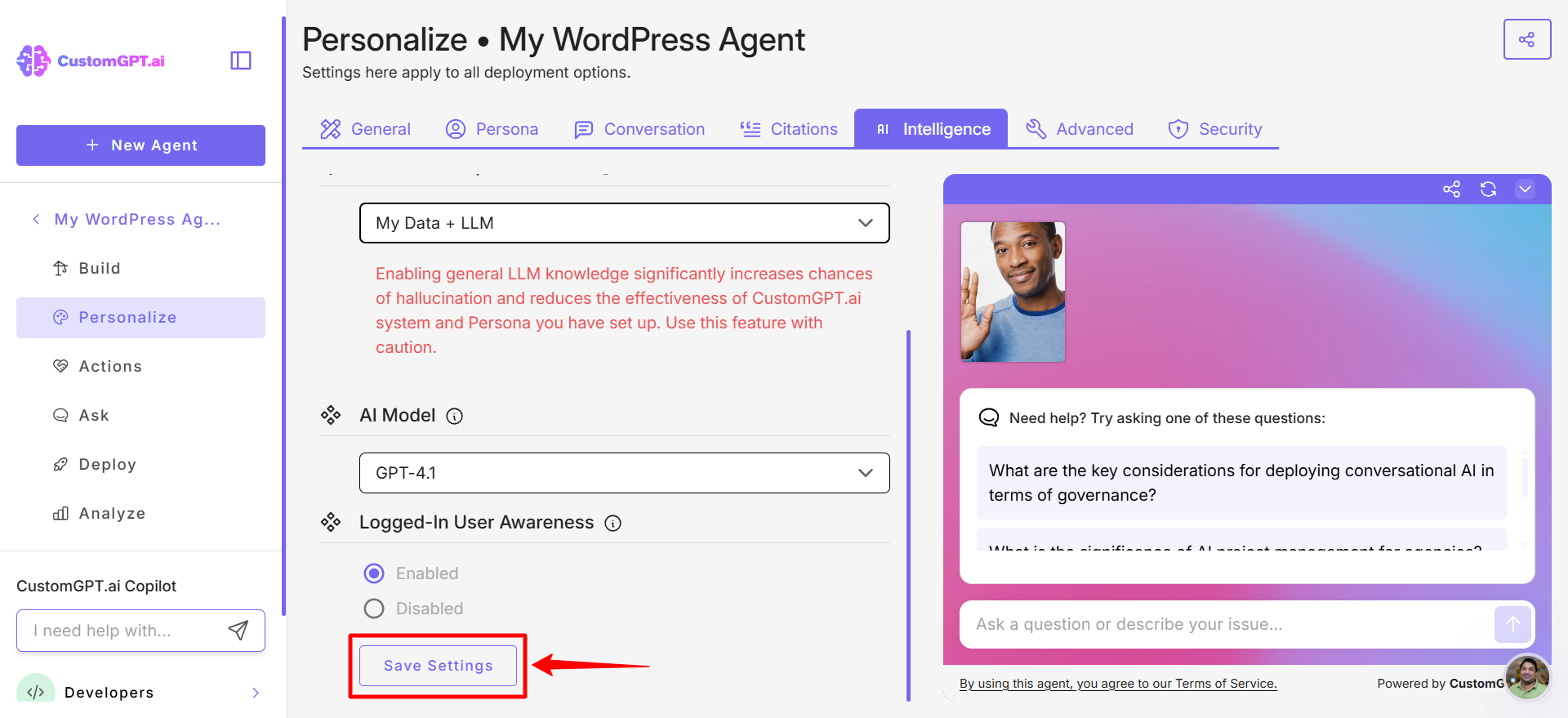

Enabling general LLM knowledge significantly increases the chance of hallucination and reduces the effectiveness of the CustomGPT.ai system and the Persona you have set up.

What this means:

- The agent may generate responses that are not based on your data

- It may contradict your brand tone, facts, or Persona settings

- There is an increased chance of hallucinations: answers that sound correct but are factually wrong or made up

Warning: Enable this only if you're comfortable trading precision for broader question handling!

How to enable this feature

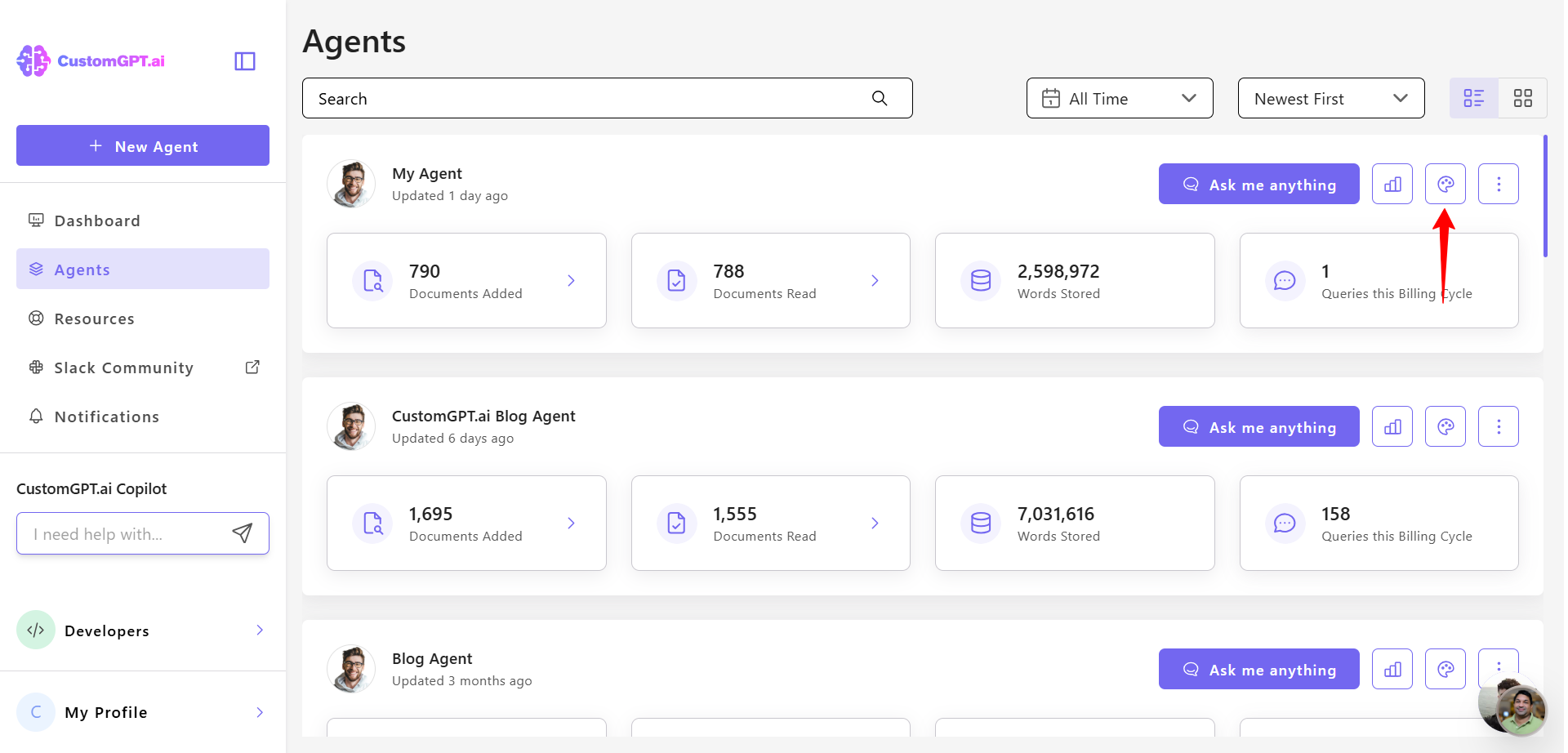

- In the CustomGPT.ai app, select Personalize in the left menu.

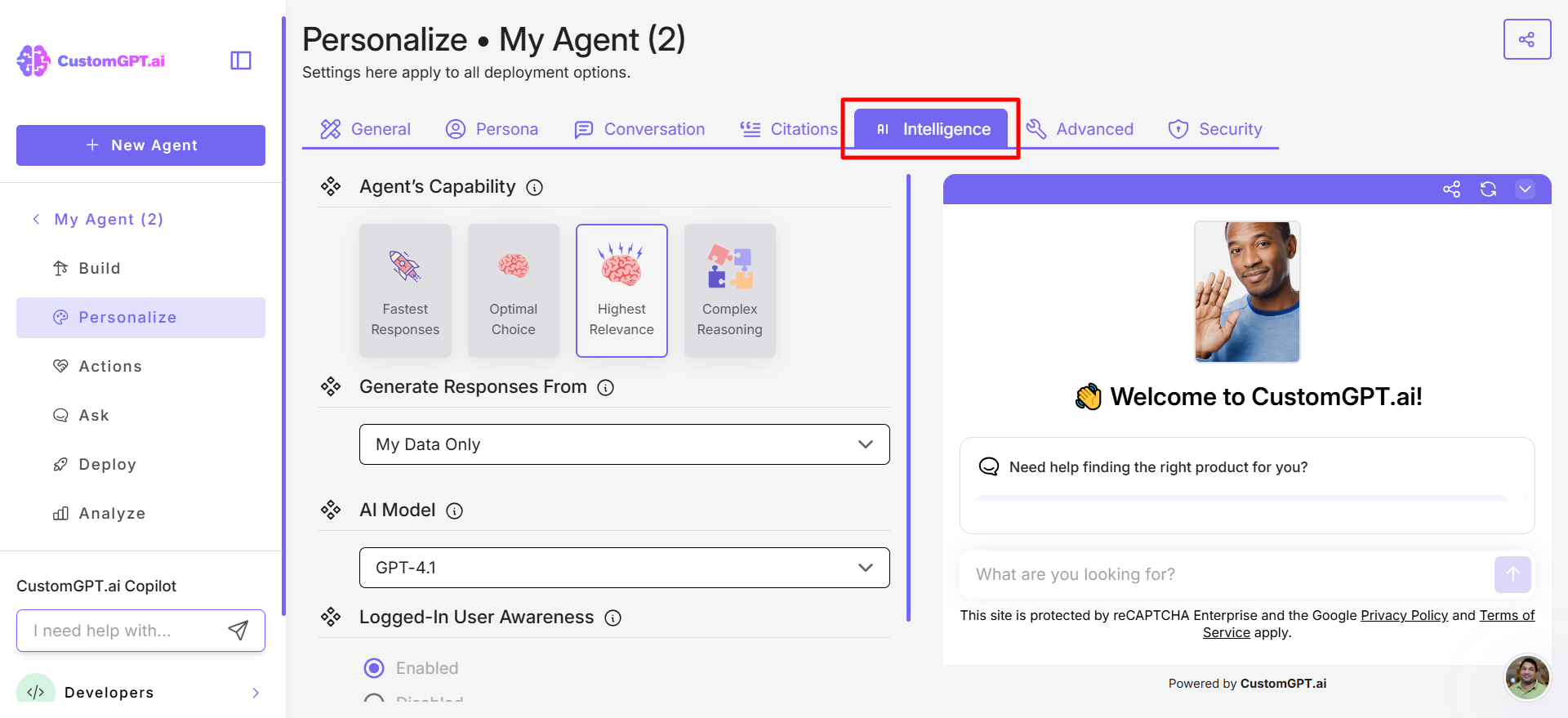

- Click the Intelligence tab.

- Scroll to the Generate responses from section.

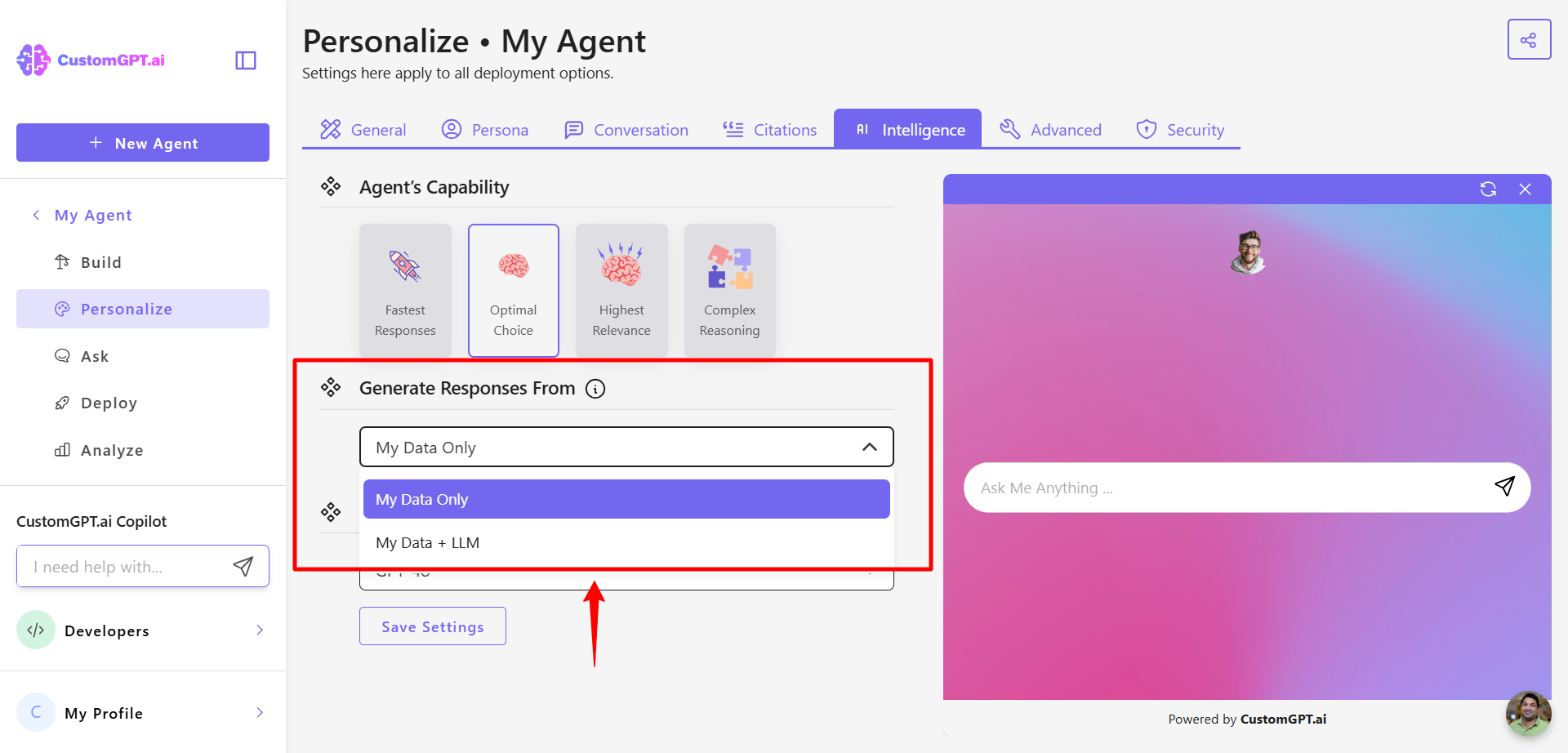

- From the dropdown menu, select My Data + LLM.

Warning: Use this feature with caution. Enabling general LLM knowledge significantly increases the chance of hallucination and reduces the effectiveness of the CustomGPT.ai system and the Persona you have set up!

- Click Save Settings to apply the changes.

For more information on best practices, see:

👉 How CustomGPT.ai agents defend against prompt injection and hallucinations.
